Framing the AI Bubble

Putting some numbers around the AI bubble narrative

Welcome to Bristlemoon Capital! We have written previously on FICO, GOOGL, ASML, SNPS, UNH, META, GRND, HEM SS, MELI, U, APP, PDD, IBKR, PAR, AER, PINS, BROS, MTCH, CPRT, RH, EYE, and TTD.

Table of contents

Introduction

Are we in an AI bubble?

Demand

Willingness to pay

Unit economics

Supply

TSMC N2 capex

ASML EUV demand

Nvidia revenue outlook

Introduction

Over the past few weeks, we decided to take stock of the ongoing AI investment cycle in an attempt to gauge where we are in the cycle and how much further there is for fundamentals to run. Essentially, we wanted to understand the magnitude of AI capex baked into current share prices and whether there could be substantially more upside or downside to estimates and thus prices.

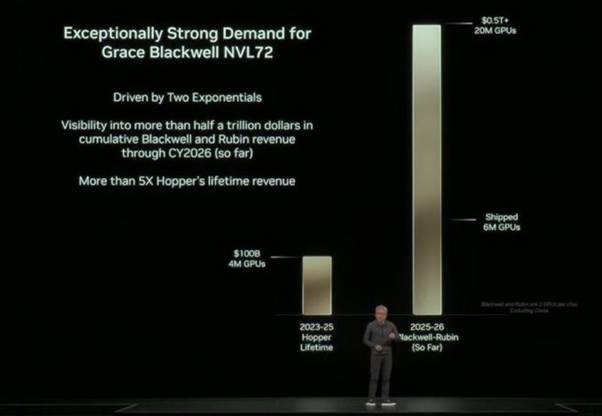

Unfortunately, Jensen Huang stole some of this post’s thunder with his revelation at GTC last week of $500 billion of booked Blackwell and Rubin orders to ship in CY 2025 and 2026. We arrive at similar magnitudes of revenue and explore what we think this means for a subset of the AI supply chain. We have limited exposure in the Fund to AI capex beneficiaries and instead have preferred to own early and obvious AI application (verb) winners. As such, this discussion is limited to the supply chain stocks that we are interested in (ASML, NVDA and TSMC), but the implications might be applicable to the AI cycle participants more broadly. As always, readers should do their own due diligence and draw their own conclusions.

The chart that pushed Nvidia to $5 trillion market cap

This exercise is also motivated by a quote from Eowyn, Shieldmaiden of Rohan: “The women of this land learned long ago that those without swords can still die upon them.”

In other words: if the AI capex beneficiaries leading the markets higher were to falter, we believe they could bring the entire market down with them; being diversified or underweight AI exposure is no consolation on the way up or down. It should not be lost on anyone that AI-related stocks have accounted for 75% of S&P 500 returns, 80% of earnings growth and 90% of capex growth since ChatGPT launched in November 2022[1]. We also can’t ignore the development that in 1H 2025, AI capex contributed more to US GDP growth than the US consumer[2].

Are we in an AI bubble?

We don’t think hyperbole around the “AI bubble” (on both sides) is helpful or instructive for rational decision making, so we endeavored to approach this analysis from both a top-down and a bottom-up perspective while checking any preconceptions at the door.

Starting with the top-down view, we compare the available data points on demand and supply for AI.

Demand

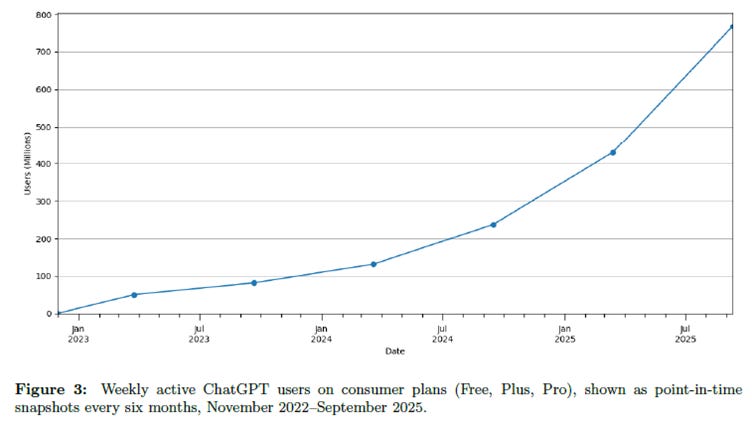

Consumer demand for AI remains robust. OpenAI reported 800 million weekly users in September, up from 500 million in March. Google just disclosed 650 million Gemini app MAU on its Q3 2025 call, up from 400 million at Google I/O in May. Over 2 billion monthly users benefit from AI Overviews, while the initial rollout of AI Mode reached 100 million users in July. Meta likewise has disclosed some big user numbers – over 1 billion Meta AI MAUs on its Q3 call.

Source: NBER “How people use ChatGPT”

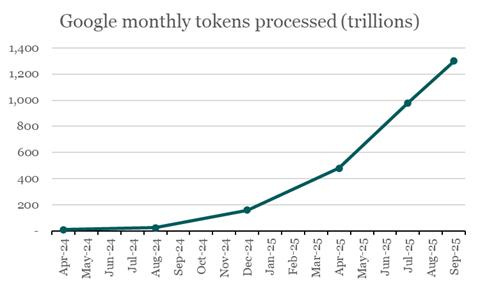

We know that WAUs and MAUs are metrics that don’t necessarily align with actual usage. For that, token demand is the better measure. Google has sporadically disclosed monthly tokens processed, and that number has grown exponentially. Google also disclosed that the Gemini API processes 7 billion tokens per minute; OpenAI’s API processes 6 billion per minute – good for roughly 300 trillion and 260 trillion tokens per month, respectively. Yes, tokens processed have been inflated by reasoning models, but we don’t have a breakdown of reasoning vs query growth. In any case, we believe higher-quality output from small reasoning models vs non-reasoning models should drive greater AI usage.
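The per-minute to per-month conversion can be checked with a few lines of Python. This is a minimal sketch that assumes a 30-day month (our assumption, not something the disclosures specify); the function name is purely illustrative.

```python
# Convert a tokens-per-minute rate into tokens per month.
# Assumption (not stated in the disclosures): a 30-day month.
MINUTES_PER_DAY = 60 * 24
DAYS_PER_MONTH = 30  # assumed

def tokens_per_month(tokens_per_minute: float) -> float:
    """Scale a per-minute token rate up to a 30-day month."""
    return tokens_per_minute * MINUTES_PER_DAY * DAYS_PER_MONTH

gemini_api = tokens_per_month(7e9)  # 7 billion tokens per minute
openai_api = tokens_per_month(6e9)  # 6 billion tokens per minute

print(f"Gemini API: {gemini_api / 1e12:.0f} trillion tokens/month")
print(f"OpenAI API: {openai_api / 1e12:.0f} trillion tokens/month")
```

The results land within rounding distance of the 300 trillion and 260 trillion figures quoted above.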

Source: Bristlemoon Capital, Google

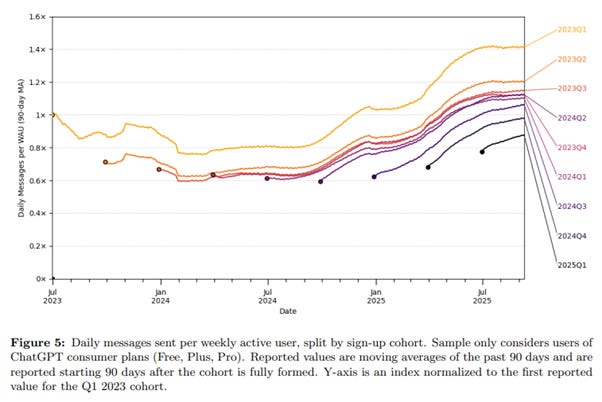

While OpenAI hasn’t consistently disclosed tokens processed, the “How people use ChatGPT” research paper by NBER does include data showing daily messages per WAU is increasing for all cohorts.

Source: NBER “How people use ChatGPT”

Earlier this month, there was some data from Apptopia (a third-party app intelligence firm) showing OpenAI app DAUs have plateaued since September, while average sessions and time spent have also declined. This period coincided with both the botched rollout of GPT-5 and Google’s launch of Nano Banana which pushed Gemini to the top of the app download charts, so we would be hesitant to exclaim “Aha! OpenAI has peaked!” on this data alone. Based on the most recent OpenAI and hyperscaler commentary, it appears that demand is still well in excess of supply and expected to remain so into 2026, despite the rapid AI server capacity additions.

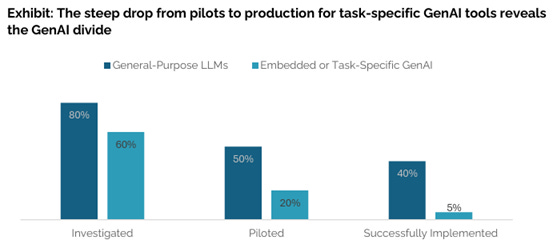

Enterprise AI demand has, unsurprisingly, lagged consumer adoption. One report recently making waves is a July study by MIT NANDA canvassing 1H 2025 that found 95% of enterprises were getting zero return on $30-40 billion of AI investment. To borrow the 20 second executive summary:

“Tools like ChatGPT and Copilot are widely adopted. Over 80 percent of organizations have explored or piloted them, and nearly 40 percent report deployment. But these tools primarily enhance individual productivity, not P&L performance. Meanwhile, enterprise grade systems, custom or vendor-sold, are being quietly rejected. Sixty percent of organizations evaluated such tools, but only 20 percent reached pilot stage and just 5 percent reached production. Most fail due to brittle workflows, lack of contextual learning, and misalignment with day-to-day operations.”[3]

Source: MIT NANDA

Our interpretation of the findings is that 1) enterprises are generally not ready to successfully implement AI, whether that be lack of sponsorship, data cleanliness, or resistance to change; and 2) the models – particularly narrow domain agentic models – are just not good enough yet. We don’t have a strong opinion on what enterprises can do to overcome the former limitations as each organization is different, but on the latter, we would just remind readers that the AI in deployment today is the worst that AI will ever be.

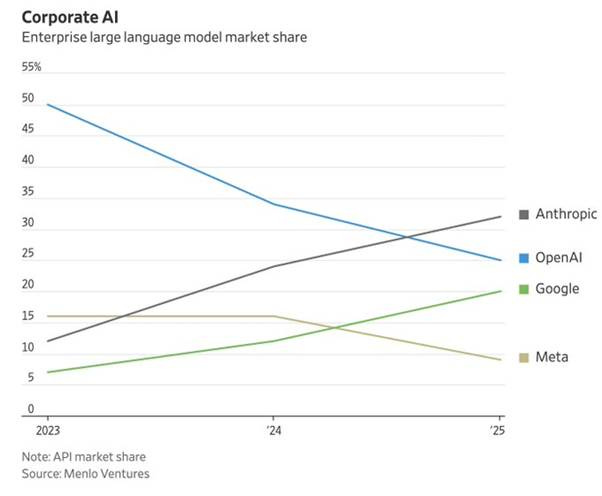

Yet even so, one very compelling enterprise use case for AI has already emerged – the AI coding agent. Many tech companies have revealed a high and growing percentage of their code base being initially written by AI coding agents (whether true or not, we can’t verify). Anthropic is the leader in this area and has just recently projected $20-26 billion in annualized revenue in 2026, up from $9 billion projected exiting 2025, and $1 billion in January 2025[4]. We believe a substantial majority of that revenue comes from enterprise, and specifically Claude Code. So, it seems that if an AI use case achieves strong product-market fit, enterprises are willing to pay up for measurable improvements in productivity.

As an aside – AI bubble doomers often cite de minimis AI revenue as the key reason why the bubble must pop. Yet this MIT report analyzing 300 public GenAI implementations captured $30-40 billion of GenAI investments. Sure, a majority might be spent on headcount and consultants, but some AI service provider must be receiving some of this as revenue…

Willingness to pay

Insatiable user or token demand is great, but it means nothing and is unsustainable if the product is given away for free. When we consider AI demand from a willingness to pay angle, the picture is perhaps somewhat less rosy. We think there is a strong case to be made that enterprises will pay if they see value in a product or service (basically the entire premise of the SaaS market). However, consumers evidently haven’t shown a strong willingness to pay for access to more capable models and higher rate limits.

It’s been reported[5] that of ChatGPT’s 800 million WAUs, only 5%, or ~40 million, are paying users. Subscriptions account for 70% of OpenAI’s $13 billion ARR, with API access contributing the remainder. This basically says to us that the 800 million users, taken as a whole, find ChatGPT no more valuable than a freemium mobile game, where a single-digit percentage of “whales” account for substantially all of a game’s revenue.
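To make the freemium comparison concrete, the reported figures can be turned into implied revenue per user. This is a rough sketch only: it assumes the WAU, paying-share and ARR figures all refer to roughly the same point in time, which the reports do not guarantee, and the variable names are ours.

```python
# Figures as quoted in the text; they may not all refer to the same date.
WEEKLY_ACTIVE_USERS = 800e6
PAYING_SHARE = 0.05
TOTAL_ARR_USD = 13e9
SUBSCRIPTION_SHARE_OF_ARR = 0.70

paying_users = WEEKLY_ACTIVE_USERS * PAYING_SHARE
subscription_arr = TOTAL_ARR_USD * SUBSCRIPTION_SHARE_OF_ARR

# Implied subscription revenue per month, per paying user and per weekly user.
monthly_revenue_per_payer = subscription_arr / paying_users / 12
monthly_revenue_per_wau = subscription_arr / WEEKLY_ACTIVE_USERS / 12

print(f"Paying users: {paying_users / 1e6:.0f} million")
print(f"Subscription ARR: ${subscription_arr / 1e9:.1f} billion")
print(f"Per paying user: ${monthly_revenue_per_payer:.2f} per month")
print(f"Per weekly user: ${monthly_revenue_per_wau:.2f} per month")
```

On these inputs the average paying user is worth a little under $20 a month, while the average weekly user is worth about $1 a month.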

We would argue that ChatGPT provides more value than Candy Crush, but the difficulty is clearly in getting consumers to pay for the premium experience when a small, non-reasoning model is probably enough to satisfy the average user’s requirements. To that end, it is clear that OpenAI is preparing a shift towards an advertising model for ChatGPT, having hired Fidji Simo and several other Meta product alumni to build out the “applications” (i.e., non-research/AI) organization.

It remains to be seen whether OpenAI can build an advertising business rivaling the scale of Meta or Google, but the precedent of supporting $100+ billion annual capex buildouts using ad dollars is a strong one. Obviously, ad dollars don’t just materialize out of thin air, so unless AI adoption is driving incremental GDP growth, OpenAI will need to take share of ad budgets from somewhere.

Unit economics

Taking a step back, a lack of consumer willingness to pay should not be conflated with weak unit economics. The “AI is a bubble” justification we take the most issue with is this misconception (often magnified by the media) that serving AI models to users is a loss-making endeavor. This is most often misrepresented as spending $2 or $3 to generate $1 of revenue, with the implication being that these AI labs will never be profitable.

A rudimentary understanding of accounting is all that is needed to disabuse oneself of this misrepresentation. The Information reported that OpenAI generated an operating loss of $7.8 billion in H1 2025 on $4.3 billion in revenue, good for roughly $3 in costs to generate $1 of revenue[6]. However, buried at the end of the report is a line stating that cost of revenue was only $2.5 billion, meaning gross profit was $1.8 billion for a 42% gross margin. This indicates that serving current models to users carries positive unit economics, with substantially all the operating loss attributable to training the next generation of models. This means that in time and at scale, frontier model providers will be capable of generating a net profit.
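The arithmetic behind both framings is short enough to lay out explicitly. The sketch below uses only the three reported H1 2025 figures; the "implied other operating costs" line is our own derivation (gross profit plus operating loss), not a number from the report.

```python
# Reported H1 2025 figures, in billions of US dollars.
REVENUE = 4.3
OPERATING_LOSS = 7.8
COST_OF_REVENUE = 2.5

# The "$3 to make $1" framing: all costs divided by revenue.
total_costs = REVENUE + OPERATING_LOSS
cost_per_revenue_dollar = total_costs / REVENUE

# The unit-economics framing: only the cost of serving current models.
gross_profit = REVENUE - COST_OF_REVENUE
gross_margin = gross_profit / REVENUE

# Derived, not reported: costs sitting below the gross profit line.
implied_other_opex = gross_profit + OPERATING_LOSS

print(f"Total costs per $1 of revenue: ${cost_per_revenue_dollar:.2f}")
print(f"Gross profit: ${gross_profit:.1f} billion")
print(f"Gross margin: {gross_margin:.0%}")
print(f"Implied costs below gross profit: ${implied_other_opex:.1f} billion")
```

The same income statement produces both the headline of nearly $3 of cost per $1 of revenue and a gross margin of about 42%; the difference is whether spending below the gross profit line is counted against current revenue.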

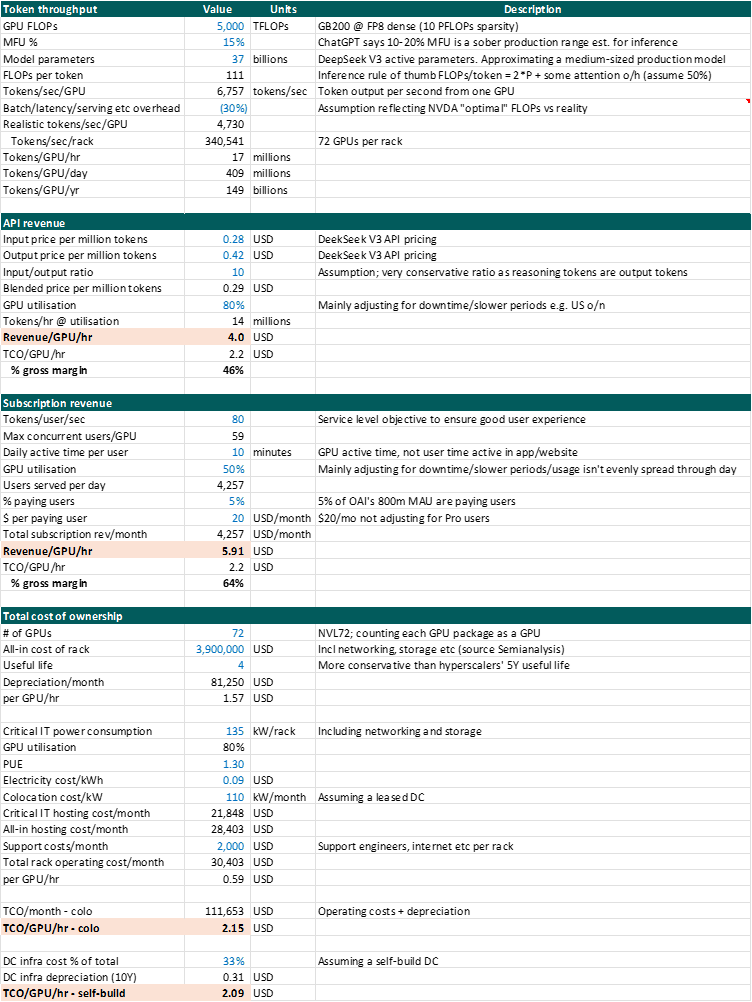

To understand just how profitable model inference can be, we ran our own back-of-the-envelope tokenomics analysis. Note there are a lot of assumptions that go into this – we are aiming for ballpark estimates here. In summary, depending on model size and API pricing or paying user mix, inference gross margins might range from ~50% to as high as ~80%.

Source: Bristlemoon Capital

For the purposes of the analysis, we used DeepSeek V3 to approximate a medium-sized Mixture of Experts model that might be used in production. We also assume DeepSeek V3 API pricing, which is extremely low compared to the closed source frontier model pricing (e.g. GPT-5 is $1.25/$10.00 input/output per million tokens and GPT-5 mini $0.25/$2.00). We haven’t found any reliable information on the sizes of the various GPT-5 models or which models are used for ChatGPT, so we’ve chosen the most conservative assumptions, i.e., those that generate the lowest revenues.

As we can see, even with some of the cheapest API pricing in the market, served on the most expensive (on a GPU/hour basis) AI servers currently available, inference via API still commands a respectable gross margin for the model provider. This of course assumes the provider also owns the servers (e.g. Google or Microsoft) – if they rent the servers from a CSP, the gross margin will be lower.

We can also see that for a subscription model where 5% of users are paying $20 a month, the service provider can still generate attractive gross margins depending on the level of utilization and other optimizations they can achieve. Apptopia data indicates US ChatGPT users spend 20-25 minutes a day in the app, so one could even argue that 10 minutes of GPU active time is excessive, especially for non-thinking chats (i.e., the majority of free ChatGPT usage). Of course, these are averages, and the many free users who might use ChatGPT once a week may be more than offset by the power users on the $200 pro tier.
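One way to frame the subscription case is to ask how much a provider could spend serving the average user and still hit a given gross margin. The sketch below is illustrative only: it uses the 5% paying share and $20 price from the text, takes the ~50% and ~80% margin range as targets, and deliberately ignores GPU cost, utilization, revenue shares and higher-priced tiers. The function names are ours.

```python
PAYING_SHARE = 0.05        # share of users on a paid plan (from the text)
MONTHLY_PRICE_USD = 20.0   # subscription price (from the text)

def blended_revenue_per_user(paying_share: float, monthly_price: float) -> float:
    """Average monthly revenue across all users, free and paid."""
    return paying_share * monthly_price

def max_serving_cost_per_user(revenue_per_user: float, target_gross_margin: float) -> float:
    """Highest monthly serving cost per user that still meets the target margin."""
    return revenue_per_user * (1.0 - target_gross_margin)

revenue = blended_revenue_per_user(PAYING_SHARE, MONTHLY_PRICE_USD)
print(f"Blended revenue per user: ${revenue:.2f} per month")

for margin in (0.50, 0.80):
    budget = max_serving_cost_per_user(revenue, margin)
    print(f"Serving cost budget at {margin:.0%} gross margin: ${budget:.2f} per user per month")
```

Under these simplified inputs, the blended revenue is about $1 per user per month, so the serving budget across free and paid users combined is measured in tens of cents.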

One final thing to note for OpenAI specifically is that it has a revenue share with Microsoft of just under 20%, in addition to renting server capacity from Azure. Yet even with these incremental contra revenues and COGS, the company is still achieving a 42% gross margin. As that revenue share declines through 2030 and OpenAI starts owning more of its servers (the 16GW it has signed directly with Nvidia and AMD), we expect gross margin to expand, even before considering additional monetization avenues such as advertising.

Supply

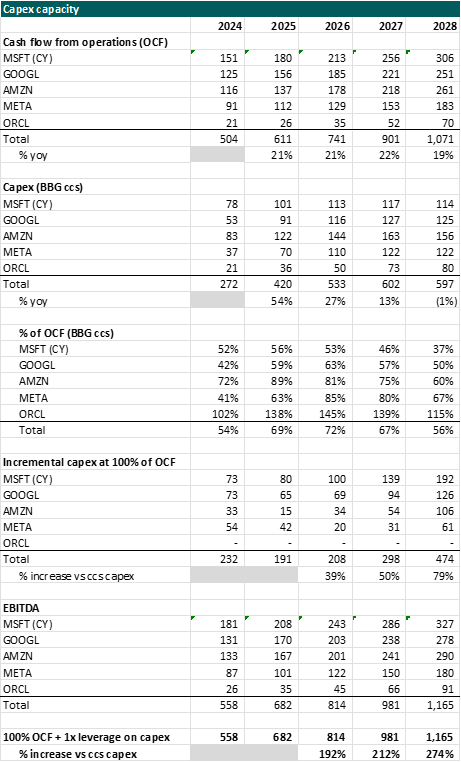

Finally, we get to token supply, or more accurately, the demand for AI infrastructure. We see two key debates here: 1) how much (more) will the hyperscalers spend in capex, and 2) what kind of ROI are they earning on this capex?

The debate over how much AI capex can or will be spent is, in our opinion, a straightforward one to settle. The hyperscalers – mostly composed of the world’s biggest cash flow printing presses – see the pursuit of AGI as existential. Some are more fanatical (Meta, Google, Oracle) than others (Microsoft, Amazon) but all have said they would rather have too much compute than too little. The ones pursuing AGI in particular are caught in a prisoner’s dilemma where the Nash equilibrium is for all involved to invest as aggressively as possible in that pursuit.
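The prisoner's dilemma logic can be illustrated with a toy two-player game. The payoff numbers below are entirely hypothetical and chosen only to have the classic dilemma structure; they are not estimates of any company's returns. The sketch finds pure-strategy Nash equilibria by checking that neither player can gain by deviating unilaterally.

```python
from itertools import product

STRATEGIES = ("restrain", "invest")

# Hypothetical payoffs (player A, player B) with a prisoner's dilemma shape:
# mutual restraint beats mutual investment, but investing is always the
# better individual response to whatever the rival does.
PAYOFFS = {
    ("restrain", "restrain"): (3, 3),
    ("restrain", "invest"): (0, 4),
    ("invest", "restrain"): (4, 0),
    ("invest", "invest"): (1, 1),
}

def pure_nash_equilibria(payoffs, strategies):
    """Return strategy pairs where neither player gains by deviating alone."""
    equilibria = []
    for a, b in product(strategies, repeat=2):
        payoff_a, payoff_b = payoffs[(a, b)]
        a_is_best = all(payoff_a >= payoffs[(alt, b)][0] for alt in strategies)
        b_is_best = all(payoff_b >= payoffs[(a, alt)][1] for alt in strategies)
        if a_is_best and b_is_best:
            equilibria.append((a, b))
    return equilibria

equilibria = pure_nash_equilibria(PAYOFFS, STRATEGIES)
print(equilibria)
```

With these made-up payoffs the only equilibrium is for both players to invest, even though both would be better off if they could credibly commit to restraint.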

These hyperscalers have significantly more capacity to spend. If we compare current Bloomberg sellside consensus operating cash flows to capex for the five hyperscalers, we see that capex is “just” about two-thirds of operating cash flow for the group. Only Oracle is currently spending more than its operating cash flow on capex, and it is projected to continue doing so. If the group were to spend up to 100% of their operating cash flows on capex (i.e. free cash flow = zero), current sellside capex estimates would need to increase by as much as 50%. If these companies were to collectively lever up to 1x EBITDA to invest in AI, their capex spend would be 3x higher than current estimates!
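The 50% figure follows directly from the capex-to-cash-flow ratio. A minimal sketch, using only the group-level "about two-thirds" ratio from the text (the function name is ours, and no company-level data is assumed):

```python
def capex_uplift_to_ocf_share(capex_to_ocf: float, target_share: float = 1.0) -> float:
    """Fractional capex increase needed for capex to reach target_share of operating cash flow."""
    return target_share / capex_to_ocf - 1.0

GROUP_CAPEX_TO_OCF = 2 / 3  # "about two-thirds", per the text

uplift = capex_uplift_to_ocf_share(GROUP_CAPEX_TO_OCF)
print(f"Capex uplift to reach 100% of operating cash flow: {uplift:.0%}")
```

Moving from two-thirds to all of operating cash flow is a multiple of 1.5x, hence the 50% increase relative to current estimates.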

Note this doesn’t include any OpenAI investment in owned infrastructure (to the extent they can afford to pay for it…) nor any neocloud capex.

Source: Bristlemoon Capital, Bloomberg

How much the hyperscalers decide to invest in AI capex will ultimately come down to what return on investment they expect to generate. The ROI side of the debate is undoubtedly more subjective/biased because no one can predict with any degree of confidence what revenues might (or might not) be unlocked in future years as a result of ongoing AI investment. AI bulls will point to the acceleration of revenues at the hyperscalers (short term), plus historical precedent of the value unlocked by massive capex cycles (railroads, fiber, etc.; long term), as evidence of at least an acceptable level of ROI. Bears will point to the massive and growing operating losses reported by the frontier labs and say there is no ROI on AI investment.

Our opinion is that the returns will (for now) fall somewhere in the middle. We’ve established above that unit economics of serving inference are quite attractive, with the hurdle being a relatively low willingness to pay for today’s models. Outside of the standalone frontier labs (e.g. OpenAI, Anthropic), it is difficult to precisely ringfence how much revenue the hyperscalers are generating specifically from their AI investments. It is clear that Meta, Google, Azure and AWS are reporting accelerating revenue growth; what’s not clear is the extent to which AI is driving this acceleration.

We think it would be unfair to ascribe zero benefit to these companies’ AI investments, particularly for the likes of Meta and Google where AI-based recommender and ad models are clearly driving results. Likewise, it would be intellectually dishonest to claim that none of the AI capex is wasted and that these companies couldn’t have achieved similar results with some fraction of their current spend. For example, we know that Meta allocates some portion of its total AI server capacity to “core AI” (the ads business) and some portion to “gen AI” (the frontier superintelligence stuff), and that mix changes over time depending on the opportunity set.

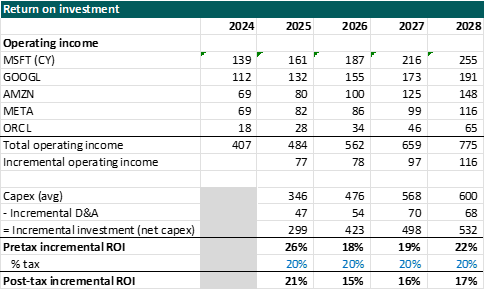

The simplest way we can think about ROI is to again look at sellside estimates. We know that the sellside capex forecasts broadly reflect the continuation of the current AI investment cycle (even though they tend to underestimate the capex). We strongly believe that, collectively, the sellside is not explicitly modeling specific AI revenue streams for these hyperscalers. Rather, analysts are typically modeling company guidance and extrapolating growth rates into the future. As such, any AI monetization breakthroughs are not captured in sellside earnings estimates, while the capex burden is. Even so, we can see that the incremental ROI on the hyperscalers’ total capex is still expected to be decent, and certainly above their cost of capital (while returns for individual hyperscalers may vary).

Source: Bristlemoon Capital, Bloomberg

We think the market has gotten accustomed to these companies generating outlandishly strong ROIC year in, year out, with their increasing returns to scale and dominance of their massive end markets. We note the above ROI is incremental, meaning their strong historical ROIC is simply being diluted during this capex investment cycle, rather than being imminently reset downward to a mid-teens level. In any case, a mid-teens post-tax ROIC with a nearly 100% reinvestment rate at the scale these companies are operating at would be a textbook example of an attractive “compounder”.
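The "compounder" point rests on the standard identity that earnings growth is approximately incremental ROIC multiplied by the reinvestment rate. The sketch below is illustrative: it assumes 15% as a stand-in for "mid-teens" and a full 100% reinvestment rate, both held constant, which is our simplification rather than a forecast.

```python
ASSUMED_ROIC = 0.15          # stand-in for "mid-teens"; an assumption
ASSUMED_REINVESTMENT = 1.00  # "nearly 100%", rounded up; an assumption

def implied_growth(roic: float, reinvestment_rate: float) -> float:
    """Earnings growth implied by reinvesting a share of earnings at a given ROIC."""
    return roic * reinvestment_rate

def earnings_index(years: int, growth: float, base: float = 100.0) -> float:
    """Earnings after compounding at a constant growth rate, indexed to base."""
    return base * (1.0 + growth) ** years

growth = implied_growth(ASSUMED_ROIC, ASSUMED_REINVESTMENT)
for years in (1, 5, 10):
    print(f"Year {years}: earnings index {earnings_index(years, growth):.0f}")
```

Under these assumptions, earnings roughly double in five years and roughly quadruple in ten, which is why a mid-teens incremental return at this scale is still attractive.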

Should the sellside be underestimating the monetization potential of all this AI investment, the realized incremental returns will be even more attractive. But should this mostly cashflow-funded investment really be a waste of capital, these companies can reduce their capex by 20%, 30% or even 50%, without necessarily hurting the performance or the fundamental attractiveness of their core businesses.

Now that we have established our position on AI demand and supply, we discuss in the paid section what we think this means for TSMC’s capex, the demand for ASML EUV tools, and implications for Nvidia’s revenue. Consider becoming a paid subscriber for full access to our analysis.